When people talk about GPU benchmarks, games are typically the first thing that comes to mind. Why? Because GPUs and games are like peanut butter and jelly: they just go hand in hand. After all, games are among the most demanding applications for a system. They use high-end graphics that demand significant resources and computational power. So using a game as a benchmark for system requirements isn’t far-fetched. It is also straightforward, since you can assume that the faster the game runs, the better your system performs. Because of this, game studios have started to include benchmarks in their titles, known as gaming benchmarks.

Why do gaming benchmarks exist?

It’s not that uncommon that software products include additional utilities (usually optional) in their installation packages. In a similar manner, some games include benchmarking tools which allow the end-users to determine how fit their own system configuration is for running that particular title. At first glance, it makes total sense. What better way is there to check how well the game runs on your machine, than… running the actual game?

However, it would be quite annoying to have a score output showing at regular intervals, or an fps counter displayed on screen, while playing the game. A separate application that measures performance using the entire game content is therefore more attractive.

The advantage of a benchmark that uses the game assets is obvious: it uses the game itself as a reference. Apart from convenience and relevance, there are a few other reasons why game studios include benchmarking tools in their titles.

It would be utterly disappointing if a new game were released but wasn’t compatible with any devices on the market. To prevent this, game studios often collaborate with device vendors to make sure their devices are compatible. Since games push the limits of device performance, they force device manufacturers to adapt and respond to the new requirements. And since device manufacturers don’t have time to test device performance by playing a game from start to end, a gaming benchmark is a much more efficient way to do it.

Additionally, device manufacturers cannot afford to wait until a game is ready for market release to optimize their devices, as that would be too late. Instead, they receive a “sample” of the game, known as the benchmark workload, while the game is still in development.

Since this “sample” of the game already exists for device manufacturers, the next logical step was to make it available to end-users as well.

How good are gaming benchmarks, and how do they differ from standard benchmarks?

When talking about benchmarks in general versus gaming benchmarks, there are a few important aspects which need to be taken into consideration.

Reproducible results

The most crucial aspect of benchmark results is their repeatability. The slightest change in the rendered workload makes the result non-reproducible, so results from different runs cannot be compared directly. A workload that doesn’t repeat itself identically each time can still provide some estimate of performance, but the results are questionable because they vary with each run. Most games include physics simulation and NPC AI (non-player character artificial intelligence), which governs the behavior and decision-making of characters that are not controlled by the player. These elements introduce variability, as they generate different scenarios each time, and this variability leads to differing performance results in each run.

Standard GPU benchmarks, on the other hand, use a predetermined scene where all animations are baked. This eliminates the variability of rendering a different scene in each run and ensures that results are reproducible. Some tools do offer a free camera mode, but this generates completely incomparable results. For example, if you place the camera in a corner of the scene where there is hardly anything heavy to render, the performance might be high. Conversely, a different camera position can produce the opposite effect, resulting in very low performance. For standard GPU benchmarks, the results may vary by less than 5% due to, for example, the device heating up. In comparison, some gaming benchmarks can vary by as much as 20% between runs, according to an article in Tom’s Hardware.
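To make the idea of run-to-run variation concrete, here is a minimal Python sketch that expresses the spread between the best and worst run as a percentage of the mean. The function name `run_to_run_variation` and the FPS figures are invented for illustration; they are not taken from any real benchmark or from the Tom’s Hardware article, and other definitions of variation (such as standard deviation) would be equally reasonable.

```python
from statistics import mean


def run_to_run_variation(scores):
    """Return the spread between best and worst run as a percentage of the mean."""
    if len(scores) < 2:
        raise ValueError("need at least two runs to measure variation")
    avg = mean(scores)
    if avg <= 0:
        raise ValueError("scores must have a positive mean")
    return (max(scores) - min(scores)) / avg * 100.0


# Hypothetical average-FPS results from repeated runs (illustrative values only).
baked_scene_runs = [60.0, 61.0, 59.0, 60.0]  # predetermined, baked scene
game_runs = [50.0, 60.0, 55.0, 55.0]         # scene affected by AI and physics

if __name__ == "__main__":
    print(f"baked scene:       {run_to_run_variation(baked_scene_runs):.1f}%")
    print(f"in-game benchmark: {run_to_run_variation(game_runs):.1f}%")
```

With these made-up numbers, the baked scene varies by about 3.3% and the in-game runs by about 18.2%, which mirrors the kind of gap described above.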

Consistency across different test runs

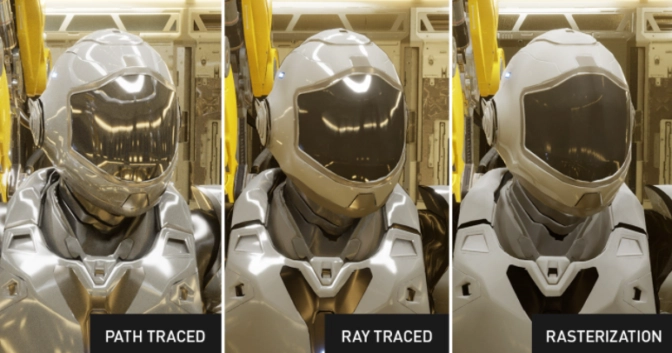

When benchmarking a graphics device, it is ideal to have a relevant workload. For example, if a game scene is using ray tracing for things like armor reflections, it makes a big difference if that takes a large part of the screen (as in close-up camera views) or a small part (as in far combat views).

A very important thing to consider is that the content in any kind of GPU benchmark must be quasi-constant. Stressing the GPU in stages does not produce relevant results. A more consistent workload results in more even, predictable performance from the GPU, which is desirable because it minimizes measurement errors. Unlike gaming benchmarks, which may exhibit workload fluctuations, standard GPU benchmarks like GPUScore’s Sacred Path are designed to maintain consistent and relevant workloads throughout the entire run.

Content type

Another crucial aspect of a benchmark is the type of content it uses. Different types of content serve different purposes, such as procedural, elementary, or full-scene content. Since most benchmarks use games as a reference, game-like content is naturally used most often. This is where game benchmarks have an obvious advantage.

In games, the content is optimized, because it reflects the actual behavior in the game. Standard benchmarks, by contrast, can only mimic the content that is used in games.

Relevant content alone is not enough: the performance results also depend on how this content is used (as mentioned above). Since a game benchmark is expected to use the same content as the game itself, it should use that content the way the game does. Randomly throwing assets around is certainly not representative of the game.

Use of latest technology

An advantage of standard benchmarks over game benchmarks is their use of the latest technology. Standard benchmarks can incorporate emerging technologies whose adoption levels are still uncertain. Games, on the other hand, typically rely on established and proven technologies. So while games push the boundaries of development, it’s still the standard benchmarks that evaluate new technologies first.

Benchmark settings

Gaming benchmarks and standard benchmarks approach settings differently. A gaming benchmark gives the end-user more precise information on how to customize the graphics settings for that specific game. A standard benchmark, on the other hand, can offer information about the optimal settings for workloads in general.

The length of benchmark runs

The time or the length of the benchmark run is another factor that has a big impact on the result. A benchmark which runs for a long time might not be that appealing for users. Typically, a benchmark run lasting up to one minute is considered a positive and enjoyable experience. If a benchmark run exceeds one minute, it becomes boring and may feel like a waste of time. And if you must do several benchmark runs, then even a one-minute-long run might be too long.

One advantage of longer runs, though, is that sustained stress may bring the GPU to a stable level of performance. This is usually what happens when playing a game. When the application starts, the GPU is considered “cold”, which makes it perform better. As it heats up, the performance decreases until it reaches a point where it stabilizes. While there may not be a difference in the length of benchmark runs between gaming and standard benchmarks, it is still an important factor to consider.
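The warm-up behavior described here can be sketched in a few lines of Python: given a log of FPS samples, find the point at which performance stops drifting. This is only an illustration under stated assumptions. The function `steady_state_start`, its `window` and `tolerance` parameters, and the sample log are all hypothetical, and a real tool would likely use a more robust stability criterion.

```python
def steady_state_start(fps_samples, window=5, tolerance=0.02):
    """Return the index of the first window of samples whose spread
    (max - min) is within `tolerance` of the window mean, or None
    if performance never settles."""
    if window < 2 or window > len(fps_samples):
        return None
    for start in range(len(fps_samples) - window + 1):
        chunk = fps_samples[start:start + window]
        avg = sum(chunk) / window
        if avg > 0 and (max(chunk) - min(chunk)) / avg <= tolerance:
            return start
    return None


# Hypothetical once-per-second FPS log: a "cold" GPU starts fast,
# then slows as it heats up and finally stabilizes.
fps_log = [100, 96, 92, 88, 85, 83, 82, 81, 81, 80, 81, 80, 80, 81, 80]

if __name__ == "__main__":
    start = steady_state_start(fps_log)
    if start is None:
        print("performance did not stabilize during this run")
    else:
        sustained = sum(fps_log[start:]) / len(fps_log[start:])
        print(f"stable from sample {start}, sustained average {sustained:.1f} fps")
```

A run that ends before the stable point is reached reports mostly “cold” performance, which is one argument for longer runs despite the inconvenience.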

So what type of graphics benchmark should I use?

When taking all the points above into consideration, it’s evident that there are both advantages and disadvantages associated with game benchmarks compared to standard benchmarks.

If the critical requirements are met, it is clear that game benchmarks are indeed relevant, as they focus the performance evaluation on a single, specific use-case. However, standard benchmarks can provide a more comprehensive perspective at a general level. After all, it’s unlikely that someone would optimize their system solely for a single, specific game. If that is the case, though, the best practice would be to check how the intended configuration performs before purchasing it. And of course, the more comparisons one can make, the better.